GDE Dynamics Plots

Mathwrist User Guide Series

Abstract

This document is a user guide to using Mathwrist’s Graphical Debugging Extension (GDE) tool to visualize the dynamic process of certain numerical algorithms implemented in NPL, Mathwrist’s C++ numerical programming library. By visualizing how a mathematical problem is tackled numerically, scientists and engineers may be able to refine the problem formulation, understand model behavior or identify implementation issues more easily.

1 Introduction

GDE dynamics display is designed to show how certain quantities of interest evolve over time or progress across iterations. NPL typically implements a numerical algorithm as a specific C++ solver class. Like all other NPL “plottable” types, a solver object that supports dynamics display is recognized by GDE at debugging time.

As usual, users can right click the variable name x of a solver object in the Visual Studio debugger and then click the context menu command “plot x” to trigger the dynamics display. However, a dynamics plottable object is not managed or stored by any of the GDE data, curve or surface sessions. Instead, its display is a one-time animation specific to the nature of the dynamics. Once the animation is complete, users can perform other visualization tasks as usual.

All NPL C++ classes that support GDE dynamics display have a public member function enable_watch(). Users need to call this function in their C++ program to enable watching the dynamics. For details of NPL, please refer to the NPL white paper, API and code example documentation series. We recommend reading our “GDE Overview” user guide first for a high-level overview of GDE.
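One plausible reason enable_watch() is opt-in is that recording per-iteration data costs memory and time, so a solver would only keep it when asked. The minimal C++ sketch below pictures that idea. The class WatchableSolver, its record() hook, the Frame struct and the frame() accessor are hypothetical names made up for illustration. They are not part of the NPL API, whose internals this guide does not describe.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical sketch, NOT the NPL API: a solver that keeps per-iteration
// history only after enable_watch() has been called.
class WatchableSolver {
public:
    struct Frame {
        double x;   // iterate at this step
        double fx;  // objective value at the iterate
    };

    // Opt in to recording iteration history for a later dynamics display.
    void enable_watch() { watch_ = true; }

    // Hypothetical hook the solver loop would call once per iteration.
    void record(double x, double fx) {
        if (watch_) history_.push_back({x, fx});  // no-op unless watching
    }

    std::size_t frame_count() const { return history_.size(); }

    const Frame& frame(std::size_t i) const {
        assert(i < history_.size());  // caller must ask for a recorded frame
        return history_[i];
    }

private:
    bool watch_ = false;          // recording is off by default
    std::vector<Frame> history_;  // one entry per recorded iteration
};
```

In this sketch, a solver that never had enable_watch() called finishes with an empty history, so there would be nothing to animate.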

2 NPL Plottable Types

The root-finding solver mathwrist::RootSolver1D and the 1-d minimization solver mathwrist::MiniSolver1D are two dynamics plottables with which users can watch data points approach the final solution along a smooth curve. This kind of dynamics plot is effectively a standard curve display with moving data points. It shares the display control settings of the GDE curve session, i.e. margins, font size, etc.
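The sketch below makes "data points approaching the final solution along a smooth curve" concrete for the 1-d minimization case. It runs a golden-section search on a unimodal function and returns every interior point it evaluates. Golden-section search is a swapped-in textbook technique chosen for illustration. This guide does not say which algorithm mathwrist::MiniSolver1D uses, and the function golden_section_trail is hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <functional>
#include <vector>

// Golden-section search for the minimum of a unimodal f on [a, b].
// Returns the sequence of interior points it evaluated, i.e. a trail of
// "moving data points" that a 1-d dynamics plot could animate along f.
// Illustrative stand-in only; not claimed to be MiniSolver1D's method.
std::vector<double> golden_section_trail(const std::function<double(double)>& f,
                                         double a, double b,
                                         double tol = 1e-8) {
    assert(a < b && tol > 0.0);
    const double inv_phi = (std::sqrt(5.0) - 1.0) / 2.0;  // about 0.618
    double c = b - inv_phi * (b - a);
    double d = a + inv_phi * (b - a);
    double fc = f(c), fd = f(d);
    std::vector<double> trail;
    while (b - a > tol) {
        if (fc < fd) {  // minimum lies in [a, d]; old c becomes the new d
            b = d; d = c; fd = fc;
            c = b - inv_phi * (b - a);
            fc = f(c);
            trail.push_back(c);
        } else {        // minimum lies in [c, b]; old d becomes the new c
            a = c; c = d; fc = fd;
            d = a + inv_phi * (b - a);
            fd = f(d);
            trail.push_back(d);
        }
    }
    trail.push_back(0.5 * (a + b));  // final estimate of the minimizer
    return trail;
}
```

For example, calling golden_section_trail with f(x) = (x - 2)^2 on [0, 5] yields points that close in on x = 2. Plotting them in order on the curve of f gives the kind of animation described above.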

The second type of dynamics plot visualizes multi-dimensional unconstrained optimization with the following solver classes:

- mathwrist::LineSearchSteepestDescent
- mathwrist::LineSearchNewton
- mathwrist::LineSearchQuasiNewton
- mathwrist::LineSearchConjugateGradient
- mathwrist::TrustRegionExact
- mathwrist::TrustRegionSteihaug

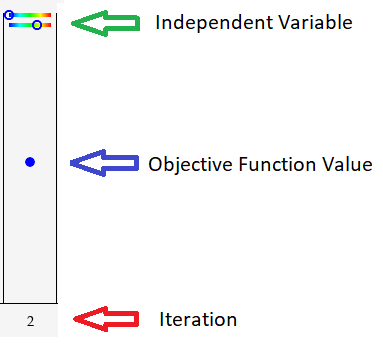

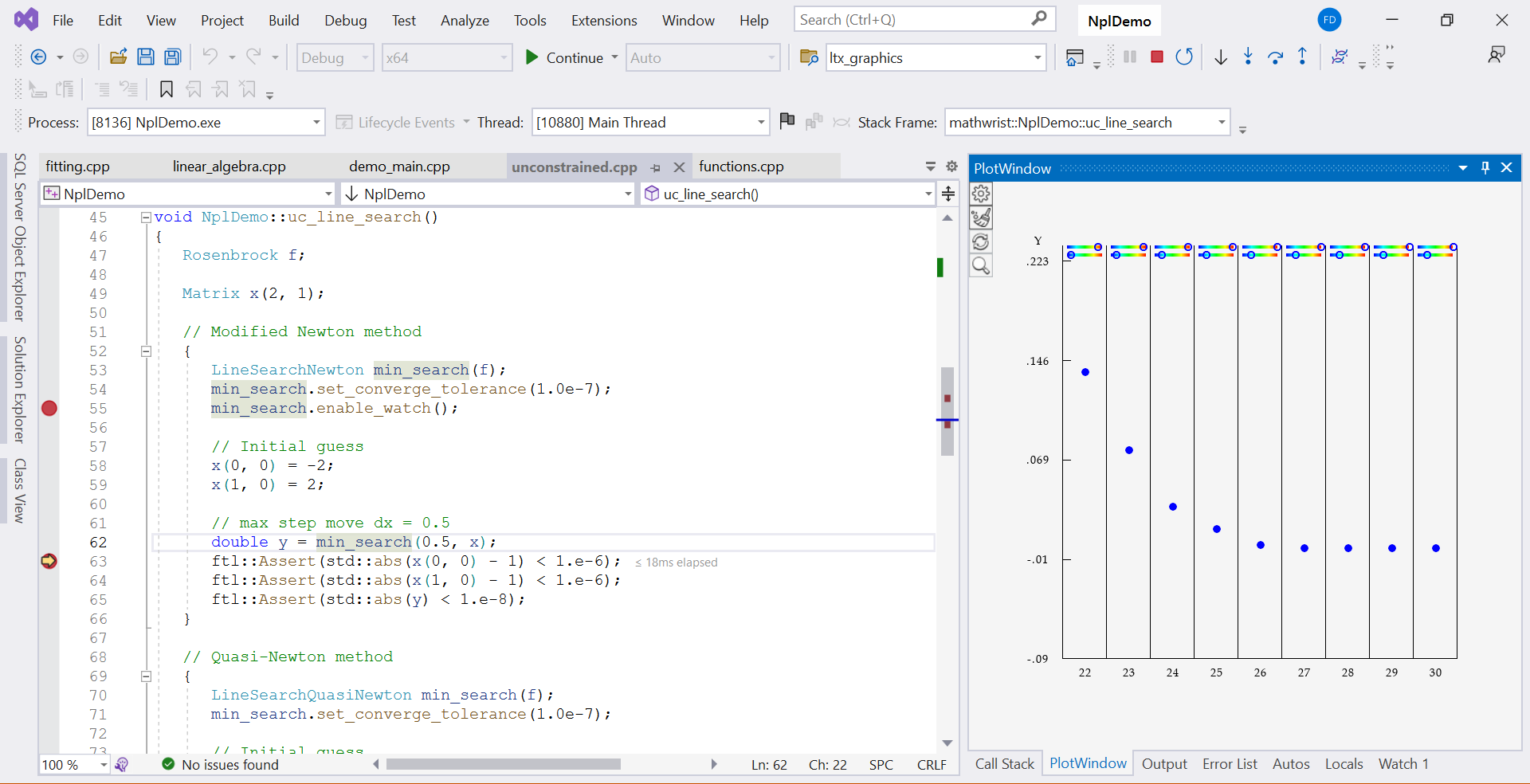

GDE displays optimization iterations as a sequence of frames. Users can watch the $n$-dimensional variable $x$ and the objective function $f(x)$ change values across iteration frames. Figure 1 is an example of such a frame. The variable $x$ and its numerical range are displayed at the top of the frame as small circles inside color gradient strips. If space permits, an $n$-dimensional $x$ is displayed as $n$ color gradient strips corresponding to $x_1, \ldots, x_n$, starting from the top. The objective function value $f(x)$ is displayed as the blue dot in the middle of Figure 1. Its position along the vertical axis indicates the value of $f(x)$. Lastly, the iteration number is displayed at the bottom of the frame.
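The frame encoding described above can be sketched as a simple mapping from values to strip positions. The C++ below assumes that a circle's position is a linear map of $x_i$ from its observed range across all iterations onto $[0, 1]$, with 0 at the left end of the strip and 1 at the right end. GDE's actual range and scaling rules are not documented here, and the names component_range and strip_position are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

// Observed [min, max] of component i over all recorded iterates x^(0), x^(1), ...
std::pair<double, double> component_range(
        const std::vector<std::vector<double>>& iterates, std::size_t i) {
    assert(!iterates.empty());
    double lo = iterates.front().at(i);
    double hi = lo;
    for (const auto& x : iterates) {
        lo = std::min(lo, x.at(i));
        hi = std::max(hi, x.at(i));
    }
    return {lo, hi};
}

// Relative position of value within [lo, hi], mapped linearly onto [0, 1]
// (0 = left end of the strip, 1 = right end). Assumed encoding, not GDE's.
double strip_position(double value, double lo, double hi) {
    assert(lo <= hi);
    if (hi == lo) return 0.5;  // degenerate range: put the circle in the middle
    const double t = (value - lo) / (hi - lo);
    return std::min(1.0, std::max(0.0, t));  // clamp values outside the range
}
```

Under this assumption, a frame would call component_range once per dimension over the whole run, then strip_position once per frame and dimension to place each circle.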

The third type of dynamics plottable includes the following constrained optimization solver classes. They also use a sequence of frames to represent optimization iterations. In general, linearly constrained optimization solvers have a phase-I feasibility stage followed by an optimality stage. In the phase-I stage, the objective is to reduce constraint violation. GDE first uses red dots to represent the feasibility objective and then switches to blue dots in the optimality stage. If an independent variable has a lower/upper bound, GDE additionally displays a short vertical bar at the left/right end of its color gradient strip, respectively.

- mathwrist::GS_SimpleBoundNewton
- mathwrist::GS_SimpleBoundQuasiNewton
- mathwrist::GS_ActiveSetNewton
- mathwrist::GS_ActiveSetQuasiNewton
- mathwrist::Simplex
- mathwrist::LP_ActiveSet
- mathwrist::QP_ActiveSet
- mathwrist::SQP_ActiveSet
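The color and bound conventions for constrained optimization frames can be summarized in a few lines of C++. This is a hypothetical sketch, not GDE code. The Phase enum, dot_color, bound_markers and bound_violation are illustrative names. The phase-I measure shown is the total bound violation $\sum_i \max(0, l_i - x_i) + \max(0, x_i - u_i)$, one common textbook feasibility objective. It is not necessarily the one the listed solvers use.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <limits>
#include <string>
#include <vector>

// Stand-in for "no bound" on one side of a variable.
constexpr double kUnbounded = std::numeric_limits<double>::infinity();

enum class Phase { Feasibility, Optimality };

// Red dot while reducing constraint violation (phase-I), blue dot afterwards.
std::string dot_color(Phase p) {
    return p == Phase::Feasibility ? "red" : "blue";
}

// Which ends of a variable's color gradient strip get a short vertical bar.
struct StripMarkers {
    bool left_bar;   // finite lower bound
    bool right_bar;  // finite upper bound
};

StripMarkers bound_markers(double lower, double upper) {
    assert(lower <= upper);  // use -kUnbounded / kUnbounded for missing bounds
    return {std::isfinite(lower), std::isfinite(upper)};
}

// One common phase-I objective for simple bounds l <= x <= u:
// total violation sum_i max(0, l_i - x_i) + max(0, x_i - u_i).
double bound_violation(const std::vector<double>& x,
                       const std::vector<double>& lower,
                       const std::vector<double>& upper) {
    assert(x.size() == lower.size() && x.size() == upper.size());
    double total = 0.0;
    for (std::size_t i = 0; i < x.size(); ++i) {
        total += std::max(0.0, lower[i] - x[i]) + std::max(0.0, x[i] - upper[i]);
    }
    return total;
}
```

In this sketch, a phase-I frame would plot bound_violation(x, l, u) as a red dot. Once it reaches zero, later frames would plot $f(x)$ as a blue dot.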

Solving a nonlinear programming problem with the mathwrist::SQP_ActiveSet solver class involves major iterations and minor iterations. In this case, the GDE dynamics plot displays only the original objective $f(x)$ at major iterations. Unlike in linearly constrained optimization, $f(x)$ may increase or fluctuate across SQP major iterations. This is because an SQP major iteration may be correcting the violation of nonlinear constraints at the cost of increasing $f(x)$.
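A small worked example shows how this can happen. It assumes an $\ell_1$ merit function $\phi(x) = f(x) + \mu\,|c(x)|$ with $\mu = 1$, a common way for SQP methods to measure progress. This guide does not state which merit function, if any, mathwrist::SQP_ActiveSet uses. The two iterates below are chosen by hand rather than produced by a solver.

$$
\begin{aligned}
&\min_{x \in \mathbb{R}^2} \; f(x) = x_1 + x_2 \quad \text{subject to} \quad c(x) = x_1^2 + x_2^2 - 2 = 0, \qquad x^* = (-1, -1),\; f(x^*) = -2 \\
&x^{(0)} = (-2, -2): \quad f = -4, \quad |c| = |8 - 2| = 6, \quad \phi = -4 + 6 = 2 \\
&x^{(1)} = (-1.1, -1.1): \quad f = -2.2, \quad |c| = |2.42 - 2| = 0.42, \quad \phi = -2.2 + 0.42 = -1.78
\end{aligned}
$$

Going from $x^{(0)}$ to $x^{(1)}$, the objective rises from $-4$ to $-2.2$. Over the same step, the constraint violation drops from 6 to 0.42 and the merit value still decreases. A major-iteration plot of $f(x)$ alone would therefore show an increase.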

Lastly, the following model, curve and surface fitting solver classes also support GDE dynamics display. Note, however, that certain problem formulations have a closed-form solution and do not involve optimization iterations. In that situation, even though an object is of a supported solver type, GDE will not add a dynamics plot command to the Visual Studio debugger context menu.

- mathwrist::NLS_GaussNewton
- mathwrist::NLS_Levenberg
- mathwrist::NLS_General
- mathwrist::SmoothBilinearSurface
- mathwrist::SmoothChebyshevCurve
- mathwrist::SmoothChebyshevSurface
- mathwrist::SmoothSplineCurve
- mathwrist::SmoothSplineSurface

3 Walkthrough Examples

3.1 Root Finding

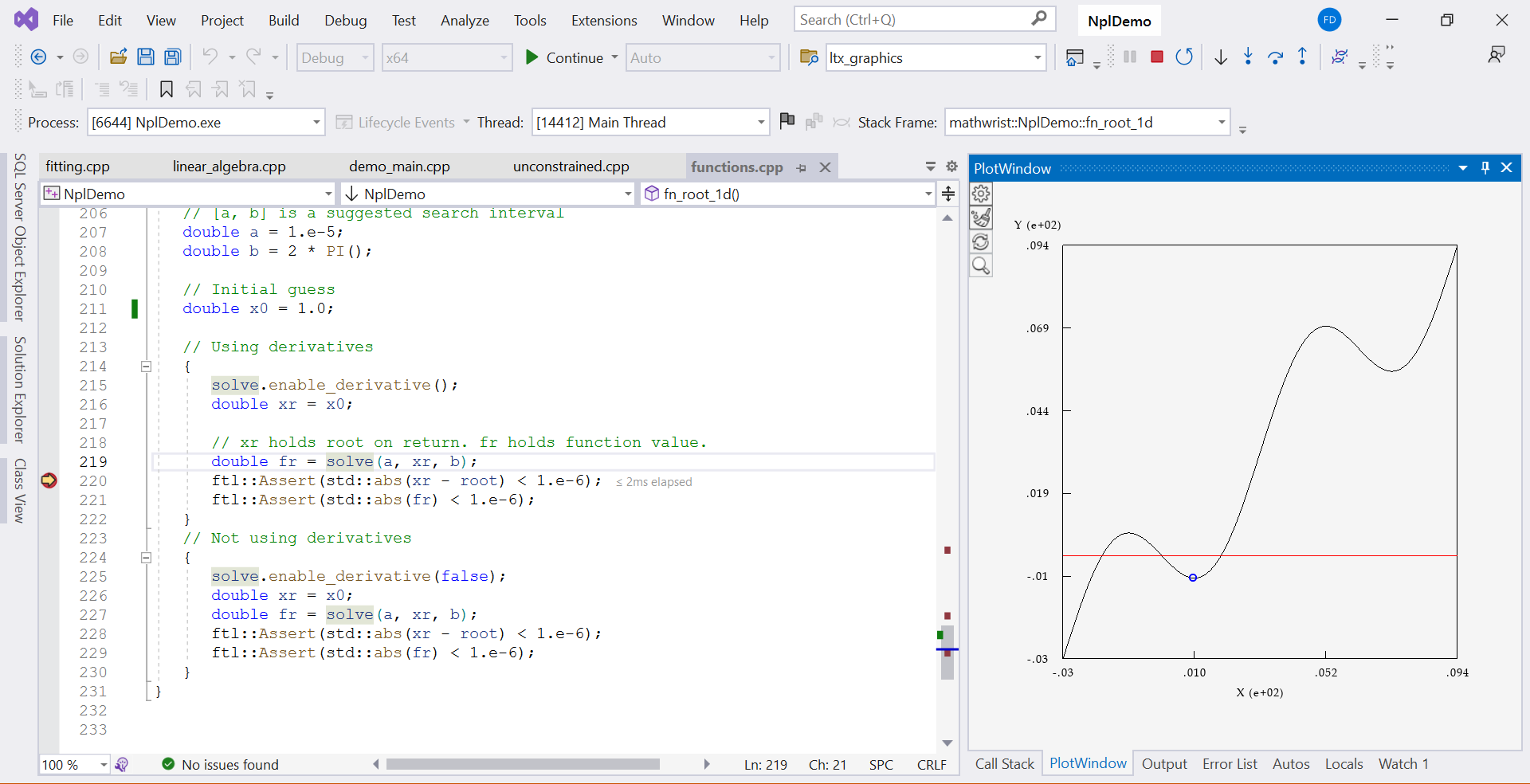

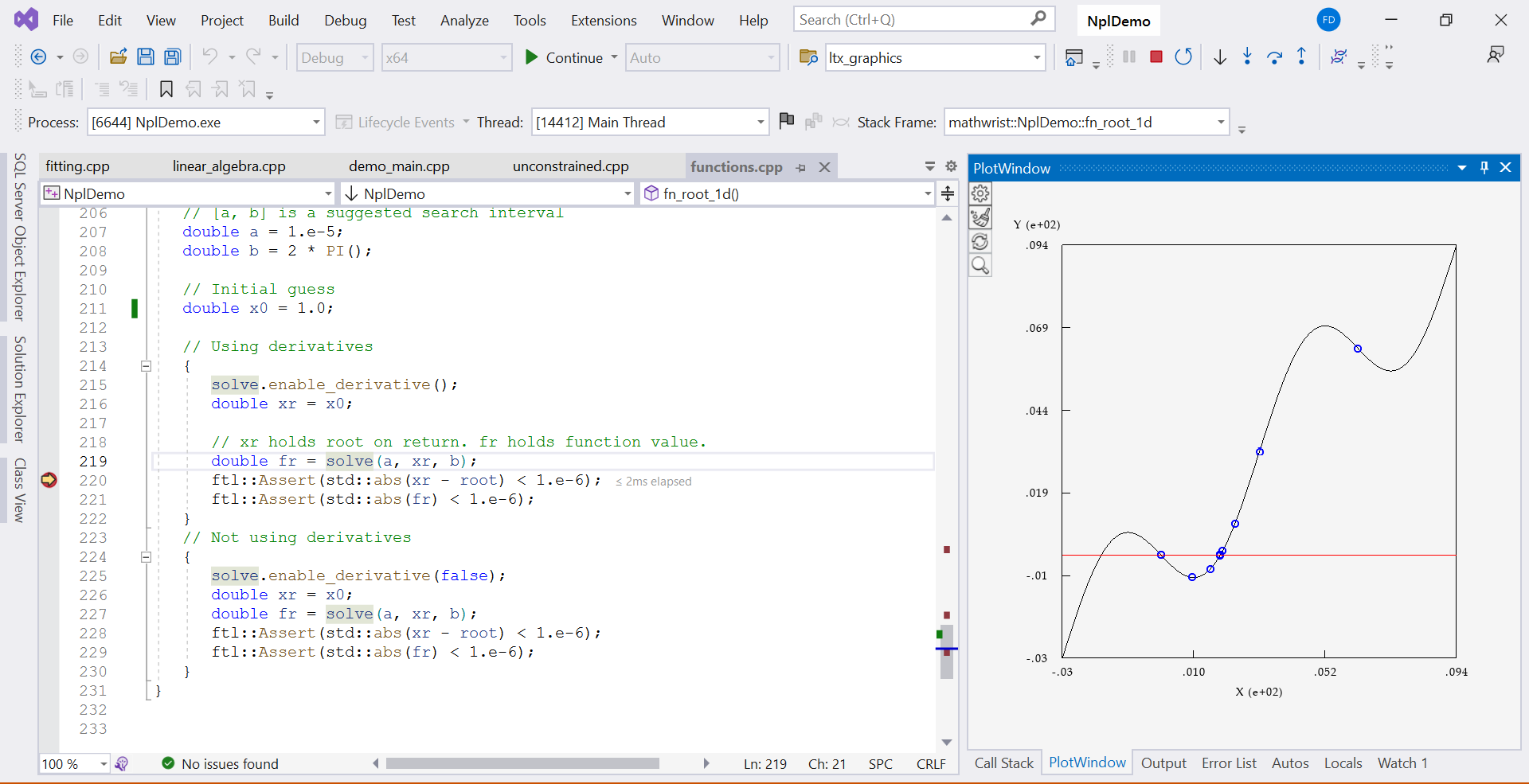

Code Listing 1 is a 1-d root-finding example from the demo project that ships with the NPL installation. From lines 3 to 14, an objective function Fx is defined locally. At lines 16 and 17, we create an instance of Fx and pass it to the root-finding solver. Note that enable_watch() is called at line 18. This tells the solver to keep track of the root-finding iterations for dynamics display. The actual root-finding work is done at line 36, and we set a breakpoint at line 37, right after that call returns.

When the debuggee program stops at line 37, right click the variable name solve and choose “plot solve” from the context menu. The GDE plot window then displays an animation that plots the objective function as a smooth curve, along with a series of data points converging to the actual root. The start and end of this animation are illustrated in Figures 2 and 3.
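The series of data points in this animation is the sequence of iterates the solver visits. As an illustration only, the sketch below records such a sequence using Newton's method, swapped in as a familiar root-finding technique. This guide does not say which method mathwrist::RootSolver1D implements, and the function newton_trail is hypothetical. The objective Fx from Code Listing 1 is not reproduced in this text, so the usage example uses $f(x) = x^2 - 2$ instead.

```cpp
#include <cassert>
#include <cmath>
#include <functional>
#include <vector>

// Newton's method for f(x) = 0 that records every iterate, i.e. the series of
// data points a 1-d root-finding animation would move along the curve of f.
// Stand-in technique; not claimed to be what RootSolver1D does internally.
std::vector<double> newton_trail(const std::function<double(double)>& f,
                                 const std::function<double(double)>& df,
                                 double x0, double tol = 1e-12, int max_iter = 50) {
    assert(tol > 0.0 && max_iter > 0);
    std::vector<double> trail{x0};
    double x = x0;
    for (int k = 0; k < max_iter; ++k) {
        const double slope = df(x);
        if (slope == 0.0) break;           // flat tangent: Newton step undefined
        const double step = f(x) / slope;
        x -= step;
        trail.push_back(x);
        if (std::fabs(step) < tol) break;  // step small enough: converged
    }
    return trail;
}
```

For instance, newton_trail with f(x) = x*x - 2, f'(x) = 2*x and x0 = 1 visits 1, 1.5, 1.41667, and so on, converging to $\sqrt{2}$.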

3.2 Optimization

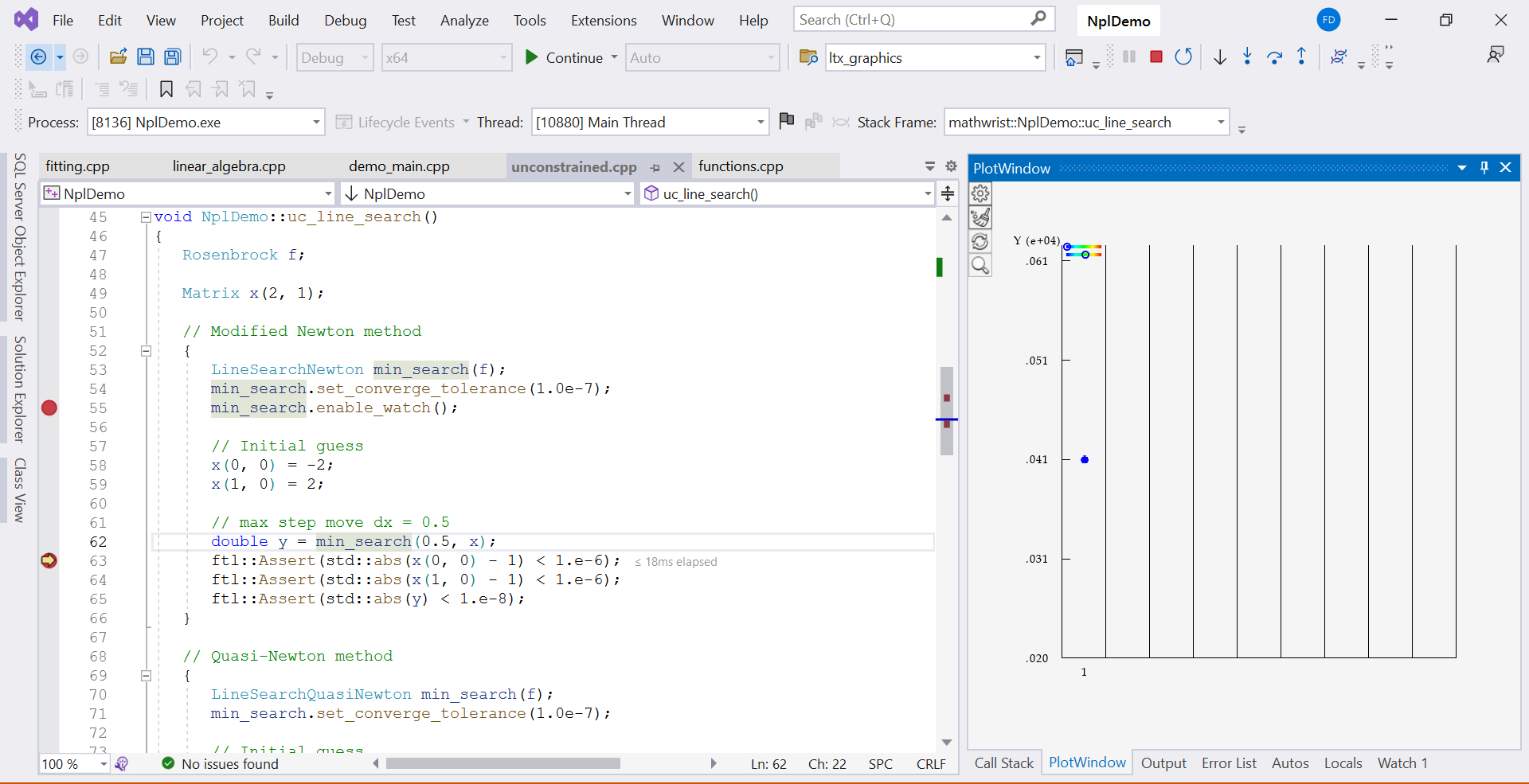

Code Listing 2 is an example from the same demo project that uses line-search methods to solve an unconstrained optimization problem. From lines 2 to 32, an objective function Rosenbrock is defined. Line 36 creates an instance of the Rosenbrock objective function. Line 41 passes the objective function to the line-search solver object min_search.

Again, at line 43, we need to call the enable_watch() function on the solver so that it saves the information necessary for dynamics display. At line 50, the solver returns from the optimization work. We set a breakpoint at line 51.

When the debuggee program stops at line 51, right click the solver name min_search and choose “plot min_search” from the context menu. GDE starts the dynamics animation as a series of frames, shown in Figure 4. Each frame corresponds to an optimization iteration, with the iteration numbers displayed along the horizontal axis at the bottom. The numerical scale of $f(x)$ is displayed along the vertical axis on the left of the dynamics plot. The end of the animation is shown in Figure 5.

A single optimization frame is illustrated in Figure 1. It corresponds to the second iteration of the optimization process (iteration number 2, pointed to by the red arrow). The objective function value at this iteration is represented by the blue dot (blue arrow annotation). Lastly, each component $x_i$ of the independent variable and its numerical range are represented by a small circle on a horizontal color gradient strip (green arrow annotation). In this example the independent variable is 2-dimensional, hence we see two color gradient strips at the top of each frame. The location of a circle within its color gradient strip encodes the relative value of that component within its numerical search range.
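The sketch below shows where such frames can come from. It runs steepest descent with an Armijo backtracking line search on the standard two-dimensional Rosenbrock function $f(x) = 100(x_2 - x_1^2)^2 + (1 - x_1)^2$ and records one frame (iteration number, $x$, $f(x)$) per iteration. It is a hypothetical stand-in written for illustration. Code Listing 2's exact Rosenbrock definition, starting point and solver settings are not reproduced in this text, so the standard form here is an assumption, and steepest_descent_frames is not an NPL function.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <vector>

using Vec2 = std::array<double, 2>;

// Standard 2-d Rosenbrock function f(x) = 100 (x2 - x1^2)^2 + (1 - x1)^2.
double rosenbrock(const Vec2& x) {
    const double a = x[1] - x[0] * x[0];
    const double b = 1.0 - x[0];
    return 100.0 * a * a + b * b;
}

// Its gradient: (-400 x1 (x2 - x1^2) - 2 (1 - x1), 200 (x2 - x1^2)).
Vec2 rosenbrock_grad(const Vec2& x) {
    const double a = x[1] - x[0] * x[0];
    return {-400.0 * x[0] * a - 2.0 * (1.0 - x[0]), 200.0 * a};
}

// One recorded frame: iteration number, iterate x_k and objective f(x_k).
struct Frame {
    int iter;
    Vec2 x;
    double f;
};

// Steepest descent with Armijo backtracking; every accepted iterate is a frame.
std::vector<Frame> steepest_descent_frames(Vec2 x, int max_iter) {
    assert(max_iter > 0);
    const double c1 = 1e-4;  // Armijo sufficient-decrease constant
    std::vector<Frame> frames;
    frames.push_back({0, x, rosenbrock(x)});
    for (int k = 1; k <= max_iter; ++k) {
        const Vec2 g = rosenbrock_grad(x);
        const double gg = g[0] * g[0] + g[1] * g[1];  // ||g||^2
        if (gg < 1e-16) break;                        // gradient essentially zero
        const double fx = frames.back().f;
        double t = 1.0;
        Vec2 trial{x[0] - t * g[0], x[1] - t * g[1]};
        // Halve t until f(x - t g) <= f(x) - c1 t ||g||^2 (this form also rejects NaN).
        while (!(rosenbrock(trial) <= fx - c1 * t * gg)) {
            t *= 0.5;
            trial = {x[0] - t * g[0], x[1] - t * g[1]};
        }
        x = trial;
        frames.push_back({k, x, rosenbrock(x)});
    }
    return frames;
}
```

Starting from the classic point $(-1.2, 1)$, the recorded $f$ values never increase, because every accepted step satisfies the Armijo condition. Steepest descent is known to progress slowly along Rosenbrock's curved valley, which is the kind of behavior a frame-by-frame display makes visible.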

4 Animation Operations

When a dynamics animation is running, users can click the plot area to pause it. Clicking the plot area again resumes the animation. At any point during a dynamics display, users can right click the plot area to request that GDE immediately terminate the animation.
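These controls behave like a small state machine. The sketch below models them under the following assumptions:

- A plain (left) click toggles between running and paused.
- A right click terminates the animation from either state.
- A finished animation holds its last frame.

AnimState, Click, on_click and next_frame are hypothetical names, not part of GDE.

```cpp
#include <cassert>

// Hypothetical model of the dynamics-animation controls.
enum class AnimState { Running, Paused, Terminated };
enum class Click { Left, Right };

// A left click toggles Running <-> Paused; a right click terminates at any
// point; once terminated, further clicks change nothing.
AnimState on_click(AnimState s, Click c) {
    if (s == AnimState::Terminated) return s;
    if (c == Click::Right) return AnimState::Terminated;
    return s == AnimState::Running ? AnimState::Paused : AnimState::Running;
}

// Frame to show on the next timer tick: advances only while running and
// (by assumption) holds on the last frame once the sequence is exhausted.
int next_frame(AnimState s, int current, int frame_count) {
    assert(frame_count > 0 && current >= 0 && current < frame_count);
    if (s != AnimState::Running) return current;
    return current + 1 < frame_count ? current + 1 : current;
}
```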